Introduction

Misconfigurations in cloud environments, and the data breaches that result from them, frequently put AWS Simple Storage Service (S3) in the news. In “Hands-On AWS Penetration Testing with Kali Linux,” authors Benjamin Caudill and Karl Gilbert offer practical steps for conducting penetration tests on major AWS services such as S3, Lambda, and CloudFormation.

S3 has enjoyed enormous popularity since its launch in 2006 due to a variety of benefits, including integration, scalability and security features. “Think of it as a one-stop shop for storage,” Caudill said.

However, Amazon S3 security depends largely on how users configure their buckets. Misconfigurations are common and often lead to data leaks and breaches that put organizations in the headlines. “An S3 bucket-related issue is overwhelmingly the primary vulnerability that comes to mind when people think of AWS, especially concerning unauthenticated attacks,” said Caudill.

The following excerpt from Chapter 7 of “Hands-On AWS Penetration Testing with Kali Linux” provides a virtual lab for identifying Amazon S3 bucket vulnerabilities, starting with setting up an insecure bucket.

As of 2023, Amazon reports that millions of customers use AWS (AWS, 2023). While AWS offers a powerful and cost-effective platform for organizations, it also presents security challenges. Traditional cybersecurity measures such as firewalls and VPNs (virtual private networks) are not sufficient on their own to protect cloud platforms. Securing sensitive corporate data and custom applications on AWS demands a modern approach: AWS penetration testing. This guide covers AWS pentesting and the tools needed to perform it effectively.

S3 Permissions and the Access API

S3 buckets use two permission systems. The first is Access Control Policies (ACPs), which are used mainly by the web UI. ACPs offer a simplified permission system that abstracts the more detailed one underneath. The second is IAM access policies, which are JSON objects that give a more explicit view of permissions.
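For illustration, the snippet below builds a policy in that JSON format using Python's standard json module. It is a minimal sketch: the bucket name example-bucket matches the CLI examples later in this section, and the single public-read statement is an assumed example of the kind of overly permissive rule a tester looks for, not a policy taken from the book.

```python
import json

# Placeholder bucket name, reused from the CLI examples in this section.
BUCKET = "example-bucket"

# Illustrative bucket policy letting anyone ("*") read every object.
# This is the kind of overly permissive statement a tester hunts for.
public_read_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

# Serialize to the JSON text you would see in the console or CLI.
policy_json = json.dumps(public_read_policy, indent=2)
print(policy_json)
```

Reading a policy as JSON like this makes it easy to spot a wildcard Principal, which the simplified ACP view can obscure.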

Permissions can apply to either a bucket or an object. Bucket permissions act as the master key: to let someone browse a bucket and work with its contents, you typically grant them access to the bucket (for example, to list it) and then to the specific objects within it.
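The bucket/object split also shows up in how policies name their resources. The sketch below assumes an identity-based IAM policy attached to a user (so no Principal element is needed) and pairs s3:ListBucket with the bucket ARN and s3:GetObject with the object ARN; the helper names bucket_arn and object_arn are hypothetical.

```python
def bucket_arn(bucket: str) -> str:
    """ARN of the bucket itself; bucket-level actions such as s3:ListBucket use it."""
    return f"arn:aws:s3:::{bucket}"


def object_arn(bucket: str, key: str = "*") -> str:
    """ARN of one object, or of every object when key is '*'."""
    return f"arn:aws:s3:::{bucket}/{key}"


# Hypothetical statements granting both levels of access, so a user
# can list the bucket and also download the objects inside it.
statements = [
    {"Effect": "Allow", "Action": "s3:ListBucket", "Resource": bucket_arn("example-bucket")},
    {"Effect": "Allow", "Action": "s3:GetObject", "Resource": object_arn("example-bucket")},
]

for statement in statements:
    print(statement["Action"], "->", statement["Resource"])
```

Granting only the first statement lets a user see object names but not download them; granting only the second lets them fetch objects whose keys they already know.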

S3 bucket objects can be accessed through the web GUI or through the AWS command-line interface (CLI) using the aws s3 command, which lets you upload, download, or delete bucket objects.

To upload and download objects using the AWS CLI, we can follow this process:

1. To start, install the AWS CLI:

- sudo apt install awscli

2. Configure the AWS CLI with your new user's credentials. You will need the access key ID and the secret access key, which you can obtain as follows:

- Log in to the AWS Management Console

- Click your username at the top right of the page

- Select Security credentials from the drop-down menu

- Find the access keys section and copy the access key ID (create a new access key if you do not have one)

- Copy the secret access key. AWS displays it only when the key is created, so save it at that point; it cannot be retrieved later

3. Once you have these, run the configure command and enter your access key ID and secret access key when prompted. Keep these credentials private to keep your account safe. The default region name and default output format can be left as is.

- aws configure

4. Once the account has been set up, listing the contents of an S3 bucket is straightforward:

- aws s3 ls s3://example-bucket

5. To list the contents of a directory (prefix) in the bucket, append its name, as shown in the preceding output, followed by a trailing /. For example, for a folder named “new”:

- aws s3 ls s3://example-bucket/new/

6. To upload a file to the S3 bucket, use the cp subcommand followed by the local filename and the full destination path in the bucket:

- aws s3 cp example.txt s3://example-bucket/new/example.txt

7. To delete a file from the S3 bucket, use the rm subcommand followed by the file's full path:

- aws s3 rm s3://example-bucket/new/example.txt
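Scripting these calls can help when the same checks must be repeated across several buckets. The Python sketch below is an assumption rather than part of the book's walkthrough: it builds the same aws s3 invocations as steps 4 to 7 and, by default, only prints them. Passing dry_run=False runs each command through subprocess, which assumes the AWS CLI is installed and configured as in step 3; the helper names s3_uri and run are hypothetical.

```python
import shlex
import shutil
import subprocess

BUCKET = "example-bucket"  # placeholder bucket name from the steps above


def s3_uri(bucket: str, key: str = "") -> str:
    """Build an s3:// URI; a trailing '/' on key targets a 'folder' prefix."""
    return f"s3://{bucket}/{key}" if key else f"s3://{bucket}"


# The operations from steps 4 to 7, expressed as argument lists.
commands = {
    "list bucket": ["aws", "s3", "ls", s3_uri(BUCKET)],
    "list folder": ["aws", "s3", "ls", s3_uri(BUCKET, "new/")],
    "upload": ["aws", "s3", "cp", "example.txt", s3_uri(BUCKET, "new/example.txt")],
    "delete": ["aws", "s3", "rm", s3_uri(BUCKET, "new/example.txt")],
}


def run(argv: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False and aws is on PATH."""
    print(shlex.join(argv))
    if not dry_run and shutil.which("aws"):
        subprocess.run(argv, check=True)


for name, argv in commands.items():
    run(argv)
```

Keeping the commands as argument lists, rather than one shell string, avoids quoting problems when object keys contain spaces.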

ACPs & ACLs

Access Control Lists (ACLs) are conceptually similar to firewall rules: they define who is allowed to access an S3 bucket. Each S3 bucket has an ACL attached to it, and that ACL can be configured to give an AWS account or group access to the bucket.

There are four main types of ACL permission:

- read: A grantee with read permission can view the filenames, sizes, and last-modified information of objects within a bucket. They can also download any object they have access to.

- write: A grantee with write permission can upload new objects and delete existing ones, potentially including objects they have no permissions on.

- read-acp: A grantee can view the ACL of any bucket or object that they have access to.

- write-acp: A grantee can modify the ACL of any bucket or object they have access to.

An object can have a maximum of 20 policies, in any combination of the four types above, for a specific grantee. A grantee is either an individual AWS account (email address) or a predefined group. IAM users cannot be grantees.
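In the S3 API, these permissions appear as READ, WRITE, READ_ACP, and WRITE_ACP (plus FULL_CONTROL, which combines them), and the predefined groups are identified by URIs. The following sketch assumes JSON in the shape returned by aws s3api get-bucket-acl and flags grants made to the AllUsers (everyone) or AuthenticatedUsers (any AWS account) groups. The sample ACL and the public_grants helper are hypothetical.

```python
import json

# Predefined S3 group URIs that make a grant effectively public.
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"
AUTH_USERS = "http://acs.amazonaws.com/groups/global/AuthenticatedUsers"

# Hypothetical ACL in the shape returned by `aws s3api get-bucket-acl`.
sample_acl = json.loads("""
{
  "Owner": {"ID": "example-owner-id"},
  "Grants": [
    {"Grantee": {"Type": "CanonicalUser", "ID": "example-owner-id"},
     "Permission": "FULL_CONTROL"},
    {"Grantee": {"Type": "Group", "URI": "http://acs.amazonaws.com/groups/global/AllUsers"},
     "Permission": "READ"},
    {"Grantee": {"Type": "Group", "URI": "http://acs.amazonaws.com/groups/global/AllUsers"},
     "Permission": "WRITE"}
  ]
}
""")


def public_grants(acl: dict) -> list[tuple[str, str]]:
    """Return (group name, permission) pairs granted to everyone or to any AWS user."""
    findings = []
    for grant in acl.get("Grants", []):
        # Account grantees carry an ID instead of a URI, so they are skipped.
        uri = grant.get("Grantee", {}).get("URI")
        if uri in (ALL_USERS, AUTH_USERS):
            findings.append((uri.rsplit("/", 1)[-1], grant["Permission"]))
    return findings


print(public_grants(sample_acl))
```

A WRITE grant to AllUsers, as in this sample, is the configuration the lab below deliberately creates.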

Vulnerable S3 Buckets

An example of a vulnerable S3 bucket is one that has been made entirely public.

New buckets are created from the S3 console home page, where you can create a vulnerable, publicly accessible bucket:

- https://s3.console.aws.amazon.com/s3/

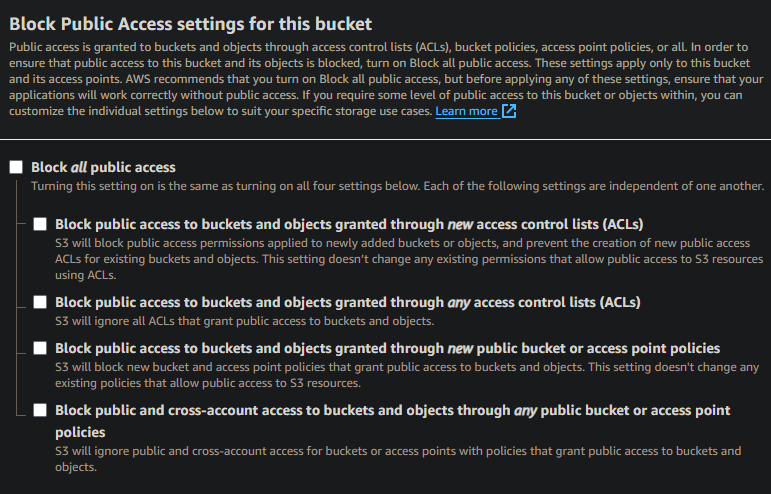

Once the bucket has been created, turn off its Block Public Access settings: clear all of the checkboxes and click Save. This removes the access restrictions that would otherwise prevent public access to the bucket. AWS will likely ask you to confirm the change, since these settings are considered insecure. Note that these protections were not enabled by default for a long time, so many existing AWS S3 buckets still have these insecure settings.
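To check the same settings from the command line, aws s3api get-public-access-block returns the four Block Public Access flags as JSON. The sketch below assumes that response shape and lists any flags that are turned off; the sample values are hypothetical and mirror the all-unchecked configuration described above. A bucket with no configuration returns an error rather than JSON; passing {} in that case reports all four protections as disabled.

```python
# The four Block Public Access flags, in the shape returned by
# `aws s3api get-public-access-block` (values here are hypothetical).
SETTINGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")

sample_response = {
    "PublicAccessBlockConfiguration": {name: False for name in SETTINGS}
}


def disabled_protections(response: dict) -> list[str]:
    """List the Block Public Access flags that are turned off."""
    config = response.get("PublicAccessBlockConfiguration", {})
    # A missing flag is treated as disabled, i.e. not protecting the bucket.
    return [name for name in SETTINGS if not config.get(name, False)]


print(disabled_protections(sample_response))
```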

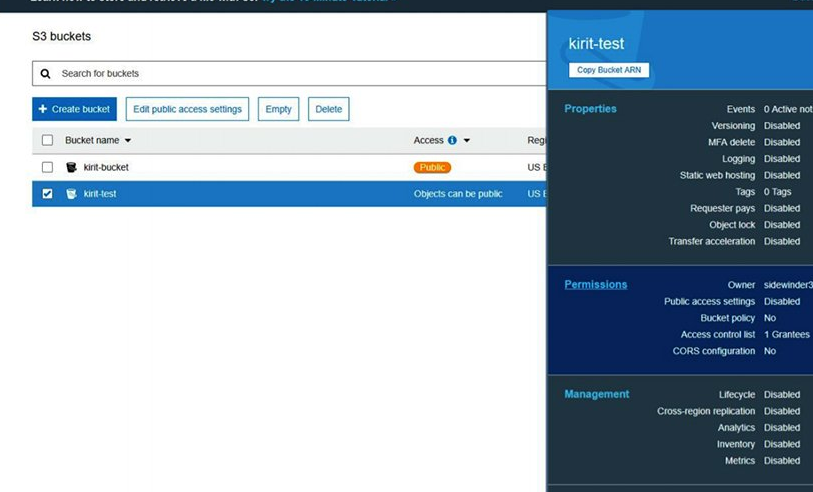

Open the Permissions tab of the newly created bucket.

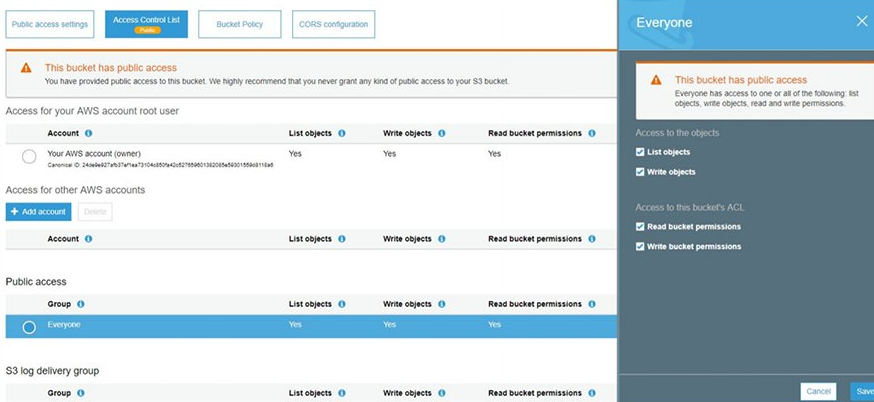

Navigate to ACL > Public Access and select Everyone. In the side panel that opens, enable all of the checkboxes to grant List and Write access to objects as well as access to the bucket ACL.

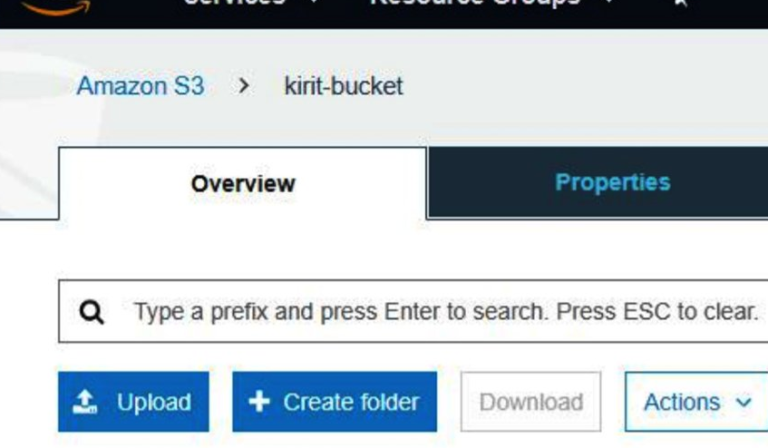

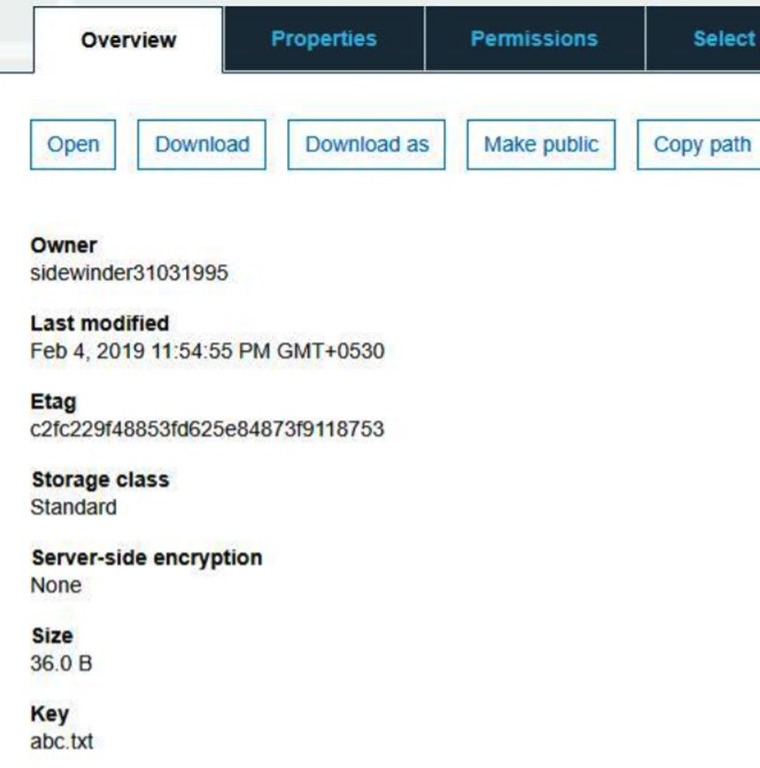

Now we have a vulnerable bucket. We can upload some objects to it and make them public; for example, we can upload a small .txt file to the bucket.

Once the file has been uploaded, select the object to get its S3 URL, which can be used to access the object from outside AWS. At this point, you can point your browser to the URL to access the object.
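The object URL follows S3's virtual-hosted style, https://bucket-name.s3.region.amazonaws.com/key. The sketch below builds such a URL and defines an unauthenticated fetch, which succeeds only when the object is publicly readable; the bucket, key, and region are placeholders, and object_url and fetch_anonymously are hypothetical helper names.

```python
from typing import Optional
from urllib.parse import quote
from urllib.request import urlopen


def object_url(bucket: str, key: str, region: Optional[str] = None) -> str:
    """Build a virtual-hosted-style URL for an S3 object."""
    host = f"{bucket}.s3.{region}.amazonaws.com" if region else f"{bucket}.s3.amazonaws.com"
    # Percent-encode the key but keep '/' so folder-like prefixes stay readable.
    return f"https://{host}/{quote(key, safe='/')}"


def fetch_anonymously(url: str) -> bytes:
    """Plain GET with no AWS credentials; fails unless the object is public."""
    with urlopen(url, timeout=10) as response:
        return response.read()


url = object_url("example-bucket", "new/example.txt", region="us-east-1")
print(url)
```

Calling fetch_anonymously(url) against a real bucket mimics what a browser does when you paste in the object URL.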

The Object URL is located at the bottom of the page, as demonstrated in the preceding screenshot. The S3 bucket has now been set up and made available to the public (vulnerable). Anybody can read from or write to this bucket (believe it or not, also vulnerable). Tools such as AWSBucketDump can exfiltrate data from this bucket.
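When a bucket allows public listing, an anonymous GET on its root URL returns a ListBucketResult XML document, which is one way enumeration tools discover object keys. The sketch below parses such a response with the standard library; the XML is a trimmed, hypothetical sample, and this is not AWSBucketDump's actual code.

```python
import xml.etree.ElementTree as ET

# XML namespace used by S3 ListBucketResult responses.
NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}

# Trimmed, hypothetical response to an anonymous GET on the bucket URL.
SAMPLE = """<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <Name>example-bucket</Name>
  <IsTruncated>false</IsTruncated>
  <Contents><Key>new/example.txt</Key><Size>12</Size></Contents>
  <Contents><Key>backup.sql</Key><Size>2048</Size></Contents>
</ListBucketResult>"""


def list_keys(xml_text: str) -> list[tuple[str, int]]:
    """Extract (key, size in bytes) pairs from a ListBucketResult document.

    Real listings return at most 1,000 keys per response and set
    IsTruncated when more remain; this sketch ignores pagination.
    """
    root = ET.fromstring(xml_text)
    return [
        (item.findtext("s3:Key", namespaces=NS), int(item.findtext("s3:Size", namespaces=NS)))
        for item in root.findall("s3:Contents", NS)
    ]


print(list_keys(SAMPLE))
```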
